Unlocking the Power of Analytics

Overcoming Common Analytics Challenges

Data analytics has become an essential component of modern business strategy. It offers insights into customer behavior, sales trends, and other key performance metrics that can inform decision-making and help organizations achieve goals. However, despite the benefits of analytics, many businesses face common challenges that prevent them from unlocking its full potential.

Poor Data Quality

Data quality should be a top consideration for any analytics team or initiative. For any analysis to be reliable, the underlying data must be accurate, complete, consistent, and timely. No analysis sets out to use poor-quality data, but many of the analytics challenges below either cause poor data quality or increase the opportunities for data to degrade.

"Data is a business asset only if it is managed well—otherwise, it is a liability." – Prashanth Southekal

First, data quality is never 100%, and waiting for perfect data ensures that decisions will never be made. Moreover, the value of data changes with its context in the data lifecycle (DLC). Value tends to increase as data moves through the four DLC stages: data capture, data integration, data science, and decision science. Data in the early stages is transactional, while data in the later stages is transformative for decision-making.

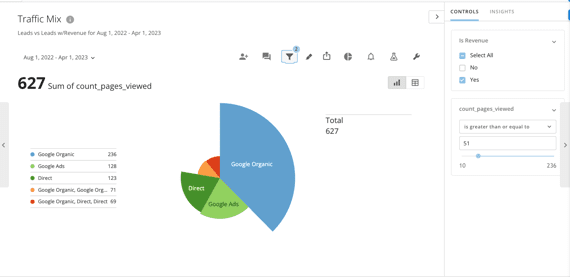

If a dataset includes missing values or incomplete records, the conclusions drawn from it may be unreliable or biased. For example, a marketing analysis of organic traffic that fails to capture a significant share of visits because of missing source labels will produce a flawed understanding of current organic performance. The conclusions will misrepresent actual traffic, and the actions taken based on them may further reduce brand performance.
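A simple first check is to measure how much of the data is missing the label an analysis depends on before drawing conclusions. The minimal sketch below assumes session records stored as Python dictionaries with a hypothetical `source` field; records with a missing or empty label are counted as "unlabeled" rather than silently dropped.

```python
from collections import Counter

def source_share(sessions, field="source"):
    """Return each traffic source's share of all sessions.

    Sessions whose label is missing or empty are counted as 'unlabeled'
    so the size of the gap stays visible instead of being dropped.
    """
    counts = Counter((s.get(field) or "unlabeled") for s in sessions)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}

# Hypothetical session records; two of the five have no source label.
sessions = [
    {"id": 1, "source": "organic"},
    {"id": 2, "source": "paid"},
    {"id": 3, "source": None},
    {"id": 4, "source": "organic"},
    {"id": 5},
]

shares = source_share(sessions)
print(shares)  # {'organic': 0.4, 'paid': 0.2, 'unlabeled': 0.4}
```

Here 40% of sessions are unlabeled, so any statement about organic traffic's share is uncertain by up to that amount until the tagging is fixed.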

More subtle data quality issues include inconsistent data that is not standardized across sources. Because many businesses collect data through multiple siloed platforms, the methodology, labeling, and even cardinality levels may differ between sources. Failing to standardize before analysis can render the data unreliable and the resulting decisions costly. For example, an international company might capture data in a number of formats, currencies, and accounting methods; decisions based on an analysis that combines them without normalization could create financial losses.
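As an illustration of standardizing before aggregating, the sketch below converts every transaction to one currency and normalizes inconsistent currency labels (such as `"eur"` vs `"EUR"`) before summing. The exchange rates are hypothetical placeholders. A real analysis would use the rate in effect on each transaction date from a trusted source.

```python
from decimal import Decimal

# Hypothetical, illustrative conversion rates to USD (not real market rates).
RATES_TO_USD = {"USD": Decimal("1.00"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}

def total_revenue_usd(transactions):
    """Sum revenue after converting every amount to a single currency."""
    total = Decimal("0")
    for tx in transactions:
        currency = tx["currency"].strip().upper()  # standardize inconsistent labels
        if currency not in RATES_TO_USD:
            # Fail loudly rather than mixing an unconverted amount into the total.
            raise ValueError(f"No conversion rate for {currency!r}")
        total += Decimal(tx["amount"]) * RATES_TO_USD[currency]
    return total

transactions = [
    {"amount": "100.00", "currency": "USD"},
    {"amount": "100.00", "currency": "eur"},
    {"amount": "100.00", "currency": "GBP"},
]
print(total_revenue_usd(transactions))  # 335.0000
```

Naively adding the raw amounts would report 300 and understate revenue. `Decimal` is used instead of floats so monetary arithmetic does not accumulate rounding error.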

Finally, current, timely data is necessary for reliable analysis. If a brand launches a large retargeting effort using outdated data, customers who have already purchased might be targeted, while new contacts not yet recorded in the dataset will be missed. The result is annoyed customers and lost revenue.
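The retargeting example can be guarded against in code by excluding known purchasers and refusing to run at all when the purchase data is stale. This is a minimal sketch: the 24-hour freshness threshold, the `build_retargeting_audience` helper, and the email-based identifiers are all hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumed freshness threshold for this sketch; the right value depends on the campaign.
MAX_DATA_AGE = timedelta(hours=24)

def build_retargeting_audience(visitors, purchasers, snapshot_time, now=None):
    """Return visitors who have not yet purchased.

    Refuses to build an audience if the purchase data (captured at
    snapshot_time) is older than MAX_DATA_AGE, since stale data would
    retarget customers who have already bought.
    """
    now = now or datetime.now(timezone.utc)
    age = now - snapshot_time
    if age > MAX_DATA_AGE:
        raise RuntimeError(f"Purchase data is {age} old; refresh it before targeting.")
    return sorted(set(visitors) - set(purchasers))

snapshot = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
audience = build_retargeting_audience(
    ["a@example.com", "b@example.com", "c@example.com"],
    ["b@example.com"],
    snapshot,
    now,
)
print(audience)  # ['a@example.com', 'c@example.com']
```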

Good data analytics produces actionable insights, so poor-quality output carries significant risk for any actions based on it. The factors below create problems for any analytics team and often result in data quality issues.

Data Silos

Data silos occur when data is isolated within specific departments or systems and is not shared or integrated with other departments or systems. For example, if marketing and sales data is not centralized in one CRM or CDP, marketing might define campaign attributes using only a subset of the necessary customer data. Similarly, if a database is managed separately, updates or corrections might be made in only one source, leading to inaccurate or unmatched records. And with separate system administration, the same data elements could be labeled or formatted differently. All of these issues create incomplete, inaccurate, or inconsistent data, and any planning or actions resulting from the analysis could be flawed.

Quality-aware analysts may try to gather and integrate data from the different systems themselves. However, differing management and access protocols can slow down data gathering, cleaning, deduplication, and transformation. The resulting delays in decision-making lead to missed opportunities and even lost revenue.
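The integration and deduplication work described above often starts with matching records on a normalized key. The sketch below merges customer records from two hypothetical systems, keyed on a lowercased, trimmed email address. The conflict rule (the most recently updated record wins, and older records only fill in missing fields) is an assumption chosen for illustration. Real pipelines often need fuzzier matching and field-specific rules.

```python
from datetime import date

def normalize_email(email):
    """Standardize the join key so 'Ann@Example.com ' and 'ann@example.com' match."""
    return email.strip().lower()

def merge_customer_records(*sources):
    """Combine records from several systems into one record per customer.

    Assumed tie-breaking rule: when systems disagree on a field, the value
    from the most recently updated record wins; older records only fill
    in fields the newer record lacks.
    """
    merged = {}
    for source in sources:
        for record in source:
            key = normalize_email(record["email"])
            current = merged.get(key)
            if current is None or record["updated"] >= current["updated"]:
                # Newer (or first) record: its values overwrite older ones.
                merged[key] = {**(current or {}), **record, "email": key}
            else:
                # Older record: keep current values, add only missing fields.
                merged[key] = {**record, **current}
    return merged

# Hypothetical records for the same customer held in two siloed systems.
crm = [{"email": "Ann@Example.com", "phone": "555-0100", "updated": date(2024, 3, 1)}]
marketing = [{"email": "ann@example.com ", "segment": "loyal",
              "phone": "555-0199", "updated": date(2024, 1, 15)}]

customers = merge_customer_records(crm, marketing)
print(customers["ann@example.com"])
```

The two systems' records collapse into one customer that keeps the CRM's newer phone number and gains the marketing system's segment.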

Lack of Expertise

Data analytics is a complex process that requires a wide range of skills and expertise, such as statistics, programming, and domain knowledge. Lack of expertise in any of these areas can have a significant impact on data analytics, leading to inaccurate insights and poor decisions.

Analysts without a detailed understanding of data quality factors may fail to clean and validate the data before beginning an analysis. If they are analyzing sales data to plan inventory and marketing, data entry errors may go unnoticed, and even simple labeling inconsistencies between systems might lead to false conclusions about the sales figures that guide manufacturing and advertising decisions. Similarly, an analyst unfamiliar with A/B testing might recommend optimizations that are counterproductive.
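One common A/B testing mistake is declaring a winner from raw conversion rates alone. The sketch below shows one standard check, a two-sided two-proportion z-test, applied to hypothetical conversion counts. It is not the only valid test, and it assumes independent visitors and reasonably large samples.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between A and B.

    Returns (z, p_value), using the pooled proportion under the null
    hypothesis that both variants convert at the same rate.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical test: variant B converts at 4.6% vs. 4.0% for A.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=230, n_b=5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Variant B looks 15% better, but the p-value is roughly 0.14. At a conventional 0.05 threshold, the difference could plausibly be noise, so recommending B on this data alone would be premature.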

Because applied data analytics spans a wide range of fields, an analyst might work outside their domain. For example, an analyst assessing customer behavior in the healthcare industry might lack experience in healthcare. The result may be misinterpreted data and incorrect conclusions about patient behavior.

Strong analytics can help businesses identify opportunities, whether new markets, customer segments, or ideas for new offerings. When analysts work with expertise gaps, they may miss these opportunities, costing significant revenue. Analysts who are aware of their gaps may take additional time to work through them conscientiously; however, that extra time can be detrimental to time-sensitive optimizations.

Data Overload

Increases in processing power and data accessibility over the past decade have driven the power of analytics and insights. However, the same large volumes of data that enable analytics can also lead to data overload, making it difficult to extract meaningful insights.

As datasets become increasingly unwieldy, analysts may not be able to sift through them to identify meaningful insights. For example, a business with large amounts of customer data may struggle to identify key trends in customer behavior or preferences. Large datasets also make analysis time-consuming. In a business with extensive sales data, analysts may spend significant time simply identifying key trends, such as top-selling products or customer segments, which delays decision-making. In many companies, data is not only immense in volume but also spread widely across systems.

If a business keeps customer data in separate social media platforms, marketing automation systems, and a CRM, the challenge of integrating them may prevent a unified view of the customer. This leads to incomplete insights, as analysts overwhelmed by scattered data may not be able to see the complete picture of the customer.

Finally, data overload can lead to analysis paralysis, in which analysts become so overwhelmed by the sheer volume of data that they cannot move forward and reach decisions. The delay or failure to analyze and act can lead to missed opportunities and lost revenue.

Inaccurate Assumptions

Data analytics relies on accurate assumptions to derive meaningful insights and inform decision-making. However, it’s important to be aware of the limitations of data analytics and the potential for inaccurate assumptions to arise.

Inaccurate assumptions can lead to biases, which can impact the accuracy and reliability of data analytics. For example, if analysts assume that a particular group of customers behaves in a certain way, they may overlook other critical factors that influence customer behavior. This can lead to suboptimal decisions and missed revenue or optimization opportunities.

Correlation vs. Causation

One of the most common mistakes in data analytics is assuming that correlation equals causation. Just because two things are related does not necessarily mean that one causes the other. For example, data might show a correlation between ice cream sales and shark attacks. However, it would be inaccurate to assume that buying ice cream causes shark attacks. Instead, there may be a common underlying factor, such as warm weather, that drives both ice cream sales and an increase in the number of people swimming in the ocean.

Bias in Sampling

When the data used is not representative of the entire population, the analysis suffers from sampling bias. For example, if a company wants to analyze customer satisfaction but surveys only customers who have already had a positive experience, the results will be biased and will not reflect the entire customer base.
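A quick simulation shows how large this bias can be. The sketch below generates hypothetical satisfaction scores for a whole customer base. It then compares the true average with a survey of only satisfied customers (score of 7 or higher, an assumed cutoff) and with a simple random sample.

```python
import random
import statistics

random.seed(7)

# Hypothetical satisfaction scores (1-10) for the entire customer base.
population = [random.randint(1, 10) for _ in range(10_000)]

# Biased sample: only customers who already had a positive experience.
positive_only = [s for s in population if s >= 7]

# Representative sample: a simple random sample of the whole base.
random_sample = random.sample(population, 500)

print("true mean:           ", round(statistics.mean(population), 2))
print("positive-only survey:", round(statistics.mean(positive_only), 2))
print("random sample:       ", round(statistics.mean(random_sample), 2))
```

The positive-only survey overstates satisfaction by roughly three points on a ten-point scale. The much smaller random sample lands close to the true mean.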

Assumptions can also drive misinterpretations when relevant variables are not included or controlled for. For example, data might show that a campaign led to an increase in sales. However, without accurate attribution, there is no way to rule out that sales would have increased regardless of the campaign. In other cases, external factors such as seasonality might drive the results.
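One way to control for an external factor like seasonality is to compare the campaign region against a similar region that did not run the campaign. The sketch below illustrates this with a difference-in-differences calculation on hypothetical weekly sales. The technique is not named in the original text. It assumes both regions would have followed parallel trends without the campaign, and that assumption should be checked before relying on the result.

```python
def naive_lift(treated_before, treated_after):
    """Change in sales in the campaign region, with no control for other factors."""
    return treated_after - treated_before

def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Lift attributable to the campaign after subtracting the change seen in a
    comparable region that did not run it (e.g., a seasonal upswing).
    Assumes both regions would otherwise have moved in parallel."""
    return (treated_after - treated_before) - (control_after - control_before)

# Hypothetical weekly sales; both regions rise because of a holiday season.
print(naive_lift(1000, 1300))               # 300
print(diff_in_diff(1000, 1300, 950, 1200))  # 50
```

Most of the apparent 300-unit lift is explained by the seasonal rise that the control region also saw.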

Assumptions can also affect complex analytics, such as machine learning algorithms. For example, an analyst can overfit a model by training it on too small a dataset and then applying it to a much larger population. The patterns the model learns are specific to the sample and produce inaccurate predictions when applied to new data.
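Overfitting is easy to demonstrate with NumPy. In the sketch below, the true relationship is linear by construction, and a degree-9 polynomial is fit to only ten noisy training points (one coefficient per point). All data is simulated. The flexible model reproduces the training sample almost exactly but generalizes worse to a larger "population" drawn from the same process.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_signal(x):
    return 2 * x + 1  # the real relationship, unknown to the analyst, is linear

# A small training sample and a larger population to apply the model to.
x_train = np.linspace(0, 1, 10)
y_train = true_signal(x_train) + rng.normal(0, 0.3, x_train.size)
x_test = rng.uniform(0, 1, 1_000)
y_test = true_signal(x_test) + rng.normal(0, 0.3, x_test.size)

def mse(model, x, y):
    """Mean squared prediction error of a fitted polynomial."""
    return float(np.mean((model(x) - y) ** 2))

simple = np.polynomial.Polynomial.fit(x_train, y_train, deg=1)
complex_ = np.polynomial.Polynomial.fit(x_train, y_train, deg=9)  # memorizes the sample

for name, model in [("degree 1", simple), ("degree 9", complex_)]:
    print(f"{name}: train MSE {mse(model, x_train, y_train):.3f}, "
          f"test MSE {mse(model, x_test, y_test):.3f}")
```

The degree-9 model's near-zero training error is a warning sign rather than a success. Its test error is what matters when the model is applied to the wider population.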

Finally, preexisting assumptions about data validity, causes, or variable relevance can drive poor decisions:

- Assuming a particular data source is reliable without checking its accuracy

- Assuming that a specific variable is irrelevant and failing to include it in the analysis

- Assuming a trend is driven by a specific factor and omitting other factors from consideration

While data analytics is a powerful tool, it’s important to be aware of its limitations and potential for inaccurate assumptions. By avoiding common biases and assumptions, organizations can use data analytics to make informed, reliable decisions that improve performance.

Inadequate Tools

The effectiveness of data analytics is highly dependent on the tools used. Tools and technologies that limit data access hinder accurate and timely decisions. Legacy platforms disconnected from one another, for instance, can block, limit, or delay analytics-driven decisions. Older or misaligned tools can also slow data processing, making it difficult to analyze large amounts of data.

For example, a business with large amounts of data that relies on outdated data analysis tools, such as Excel spreadsheets, may take a long time to analyze large datasets. The resulting delays, or even inaccurate results, can be costly in fast-paced industries such as finance or e-commerce. Even traditional, integrated tools can lead to incorrect insights. Tools that lack advanced statistical capabilities, such as regression analysis or predictive modeling, may not be able to identify trends or make accurate predictions.

In the best-case scenario, inadequate tools lead to missed opportunities, through either a lack of depth or a delay in analysis. In the worst-case scenario, such tools (e.g., manual entry or poor storage systems) can lead to data loss through human error or system failures.

Business Misalignment

While data analytics is recognized as essential in most businesses, its insights are valuable only if they can be translated into action. Translating insights into action, however, requires integrating them with business processes, which may be misaligned.

Business processes can be siloed across the organization, with different processes for each dataset owner or data-producing function. Each department may use different analytics processes without a way to standardize or integrate. As a result, insights can be incomplete without access to all relevant data for a unified view of the customer.

Business processes may be more advanced than the analytics tools in use. For example, a business whose analytics tools are not integrated with its supply chain management system may not recognize disruptions in real time. Without integration into actual business processes, analysts may not be able to accurately identify trends or make predictions.

Business alignment affects all phases of data analytics projects. Key performance indicators (KPIs) from analytics must align with business operations. If a business goal is revenue growth, the business needs to establish key metrics such as average order value (AOV), customer acquisition cost (CAC), or lifetime value (LTV). If different parts of the business are misaligned, these KPIs will be difficult to define or analyze. Moreover, when an organization is not aligned across divisions, the relevant datasets are likely to be distributed and disparate across functions.
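Alignment on KPIs partly means agreeing on one shared definition of each metric. The sketch below uses common textbook definitions of AOV and CAC, plus a deliberately simplified LTV (AOV × purchases per year × years retained). All inputs are hypothetical. Many teams also factor in gross margin and discounting, so the LTV formula here is an assumption rather than a standard every business uses.

```python
def average_order_value(revenue, orders):
    """AOV: total revenue divided by number of orders."""
    return revenue / orders

def customer_acquisition_cost(acquisition_spend, new_customers):
    """CAC: sales and marketing spend divided by customers acquired."""
    return acquisition_spend / new_customers

def lifetime_value(aov, purchases_per_year, years_retained):
    """Simplified LTV: AOV x purchase frequency x expected customer lifespan.
    Margin and discounting are intentionally omitted in this sketch."""
    return aov * purchases_per_year * years_retained

# Hypothetical quarter.
aov = average_order_value(revenue=50_000, orders=625)                          # 80.0
cac = customer_acquisition_cost(acquisition_spend=12_000, new_customers=100)  # 120.0
ltv = lifetime_value(aov, purchases_per_year=4, years_retained=3)             # 960.0
print(f"AOV ${aov:.2f}, CAC ${cac:.2f}, LTV ${ltv:.2f}")
```

If marketing and finance each computed CAC with different spend categories or different customer counts, the same business would report different numbers. That is the kind of misalignment the text describes.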

Business alignment is also essential to ongoing performance management. Effective data analytics requires ongoing monitoring and optimization to ensure that KPIs are being met. For example, if a business objective is to increase customer satisfaction, the business may need to regularly monitor customer feedback and identify areas for improvement. Without alignment, it may be challenging to identify the root causes of customer dissatisfaction or implement effective solutions. Finally, even after analysis identifies an opportunity, it may be impossible to reach the agreement required for decision-making and action.

Poor Visualization

While visualization may seem a minor component of analytic output, poor or limited data visualization makes it difficult for analysts to convey insights and trends in an easily understandable manner. If a business has data stored in multiple systems, the available tools may not be able to create views that capture the full breadth of the results. Good visualizations surface insights in a way that simple tabular data cannot. Conversely, visualizations that are cluttered, missing relevant data points, or poorly labeled limit stakeholders’ understanding, lose context, and ultimately lead to incorrect conclusions. As a result, actions will either fail to seize opportunities or be counterproductive.

Inadequate Security

Most data analytics challenges result in a failure to uncover accurate insights or to convert them into action. Data security issues, however, create ramifications that go beyond missed opportunities.

Data analytics relies on access to sensitive information, making it vulnerable to security risks. Inadequate security measures can significantly impact data analytics and put organizations at risk of data breaches, cyber-attacks, and other security threats. Recent legislation and the ongoing deprecation of third-party cookies have highlighted the risks when data security is not well understood or planned.

Inadequate security measures can lead to data breaches, which can compromise sensitive information. For example, if data is not encrypted or protected by strong passwords, it can be accessed by unauthorized users. The resulting financial losses, reputational damage, and legal liabilities can be catastrophic for a brand.

Inadequate security measures can also leave data analytics vulnerable to malware and cyber-attacks. For example, if data is not protected by firewalls or antivirus software, it can be infected by viruses or other malicious software. Data loss, system downtime, and disruptions to business impact the entire organization. Moreover, malware and cyber-attacks can compromise the integrity of data analytics, requiring significant recovery before insights can regain trust.

While external threats may be foremost for brands, poor security protocols and training can also leave data analytics vulnerable to insider threats. For example, if employees have unrestricted access to sensitive information, they may be more likely to misuse it for personal gain or inadvertently compromise it. This can lead to significant losses for the organization, as well as impact the accuracy and reliability of data analytics.
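A common mitigation for insider risk is least-privilege access, in which each role sees only the fields it needs. The sketch below applies that idea in application code. The roles, field names, and `view_for_role` helper are hypothetical. In practice, such controls are usually enforced in the database or data platform rather than only in analysis scripts.

```python
# Hypothetical roles mapped to the customer fields each role may read.
ROLE_FIELDS = {
    "marketing_analyst": {"customer_id", "segment", "last_purchase_date"},
    "support_agent": {"customer_id", "email", "phone"},
}

def view_for_role(record, role):
    """Return only the fields a role is permitted to see.

    Deny by default: a role missing from ROLE_FIELDS sees nothing.
    """
    allowed = ROLE_FIELDS.get(role, set())
    return {field: value for field, value in record.items() if field in allowed}

record = {
    "customer_id": 17,
    "email": "pat@example.com",
    "phone": "555-0142",
    "segment": "loyal",
    "last_purchase_date": "2024-02-10",
    "card_last4": "4242",
}
print(view_for_role(record, "marketing_analyst"))
# {'customer_id': 17, 'segment': 'loyal', 'last_purchase_date': '2024-02-10'}
print(view_for_role(record, "intern"))  # {}
```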

Finally, in addition to threats to business continuity, brand profile, and data analytics, inadequate security measures can also impact regulatory compliance, which can result in significant legal and financial liabilities for the organization. For example, if data is not adequately protected or is accessed by unauthorized parties, the organization may be in violation of various data protection laws and regulations. This can lead to significant penalties and damage to the organization’s reputation.

Ad Platform Limitations

For many businesses just beginning their data analytics journey, existing ad and analytics platforms provide both the entry point and the constraints of analysis. While Google Ads and Google Analytics provide accessible analytics, these siloed platforms also impose default metrics and limiting standards.

Ad platforms such as Microsoft Advertising, Amazon Advertising, and Google Ads are built to drive advertising; as a result, the analytics they offer can be limited and can inadvertently narrow analysis. For example, most ad platforms limit access to raw data, instead providing advertisers with aggregated data and predefined metrics through their reporting interfaces. Raw data is crucial for advanced analysis, such as creating custom metrics, identifying patterns, or conducting sophisticated statistical modeling.

Additionally, ad platforms limit the attribution models available for determining the efficacy of different touchpoints along the customer journey. The most powerful models are data-driven “black box” models that do not expose their algorithms or the weights of different parameters and touchpoints. The simplest (and often most popular) models are single-touch, such as first-click or last-click.
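To see why single-touch models can mislead, compare how first-click, last-click, and a simple linear (equal-credit) model split the same conversion. The sketch below is a generic illustration with a hypothetical four-touch journey. It is not how any particular ad platform or Galileo computes attribution.

```python
from collections import defaultdict

def attribute(journey, revenue, model="linear"):
    """Split one conversion's revenue across the touchpoints in a journey.

    journey: ordered list of channel names, first touch to last touch.
    model: "first_click", "last_click", or "linear" (equal credit per touch).
    """
    if not journey:
        raise ValueError("journey must contain at least one touchpoint")
    credit = defaultdict(float)
    if model == "first_click":
        credit[journey[0]] += revenue
    elif model == "last_click":
        credit[journey[-1]] += revenue
    elif model == "linear":
        share = revenue / len(journey)
        for channel in journey:
            credit[channel] += share
    else:
        raise ValueError(f"unknown model: {model}")
    return dict(credit)

journey = ["social", "organic_search", "email", "paid_search"]
for model in ("first_click", "last_click", "linear"):
    print(model, attribute(journey, 100.0, model))
```

Under last-click, paid search receives all the credit and the social, organic, and email touches that preceded it receive none. A platform that reports only this view will tend to favor its own closing channel.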

Finally, cross-channel analysis is limited to the channels an ad platform can access, creating a bias toward the platform’s own ad channel. For example, while Google Ads offers some integration with Google Analytics, it still limits cross-channel analysis. Advertisers often use multiple ad platforms and channels to reach their target audience, but an ad platform’s limited integration capabilities hinder marketers’ ability to analyze holistically and gain a complete view of marketing efforts across all channels. Full-funnel omnichannel attribution, like Arcalea’s Galileo, can overcome these limitations and drive revenue and efficiencies. By providing an unbiased analysis of the full customer journey, Galileo isolates high-performing assets and tactics for multiplying ROI.

Data analytics can be a powerful tool for organizations to gain insights and make informed decisions. However, to unlock its full potential, businesses must overcome common challenges. Brands at all levels gain by constantly evaluating their data practices and overall data science maturity levels. Aware and proactive organizations can gather the greatest insights from analytics data and gain a competitive advantage in their industry.

The Right Tool for Attribution.

Designed for today’s market complexity, full-path attribution unlocks exponential growth value. Arcalea’s Galileo activates marketing data across any marketing mix, maximizing return in each channel. Gain full views of your buyer journeys and increase ROI 20-40%.

Designed for Revenue.

Selected Reading

Besbes, Ahmed (2020). 9 Common Mistakes Beginner Data Scientists Make. Towards Data Science. https://towardsdatascience.com/9-common-mistakes-beginner-data-scientists-make-91255ddd1311

Gargiulo, Michael (2022). Data Security Threats: What You Need to Know. Forbes. https://www.forbes.com/sites/forbestechcouncil/2022/05/16/data-security-threats-what-you-need-to-know/

Kamat, Vedita (2021). A Complete Beginner’s Guide to Data Visualization. Analytics Vidhya. https://www.analyticsvidhya.com/blog/2021/04/a-complete-beginners-guide-to-data-visualization/

Magoulas, R., & Swoyer, S. (2020). The State of Data Quality in 2020. O’Reilly. https://www.oreilly.com/radar/the-state-of-data-quality-in-2020/

Nelson, Mark (2022). What Does Data-Driven Decision-Making Really Mean? Forbes.

O’Toole, Tom (2020). What’s the Best Approach to Data Analytics? Harvard Business Review. https://hbr.org/2020/03/whats-the-best-approach-to-data-analytics

Rietsch, Dietmar (2022). Breaking Down Data Silos: Five Essential Best Practices. Solutions Review. https://solutionsreview.com/data-management/breaking-down-data-silos-five-essential-best-practices/

Sainam, P., Auh, S., Ettenson, R., & Jung, Y.S. (2022). How Well Does Your Company Use Analytics? Harvard Business Review. https://hbr.org/2022/07/how-well-does-your-company-use-analytics

Southekal, Prashanth (2023). Data Quality: Empowering Businesses with Analytics and AI. Wiley.

Southekal, Prashanth (2023). Three Key Misconceptions of Data Quality. Forbes. https://www.forbes.com/sites/forbestechcouncil/2023/04/04/three-key-misconceptions-of-data-quality/