The Tech Behind Predictable Growth

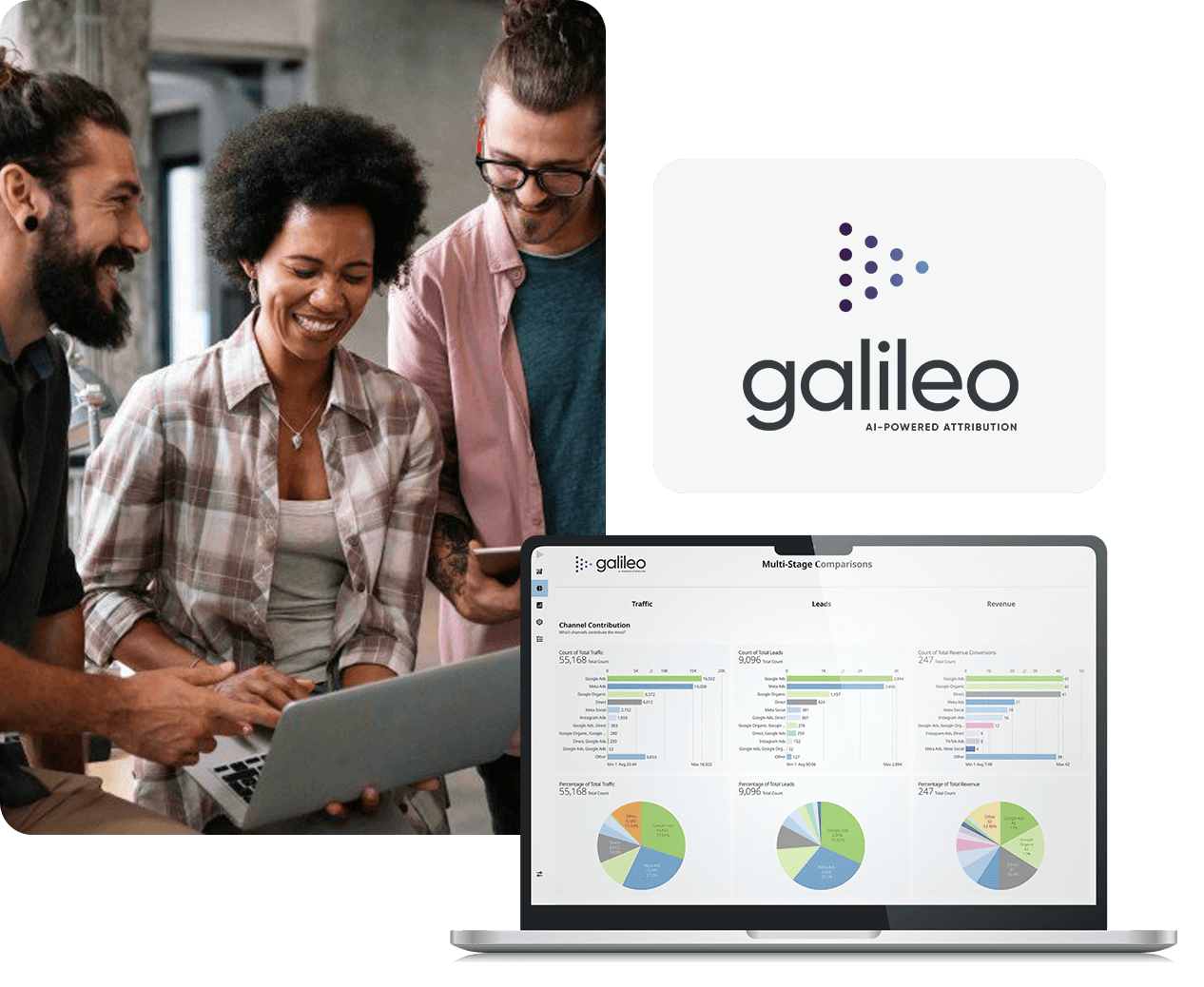

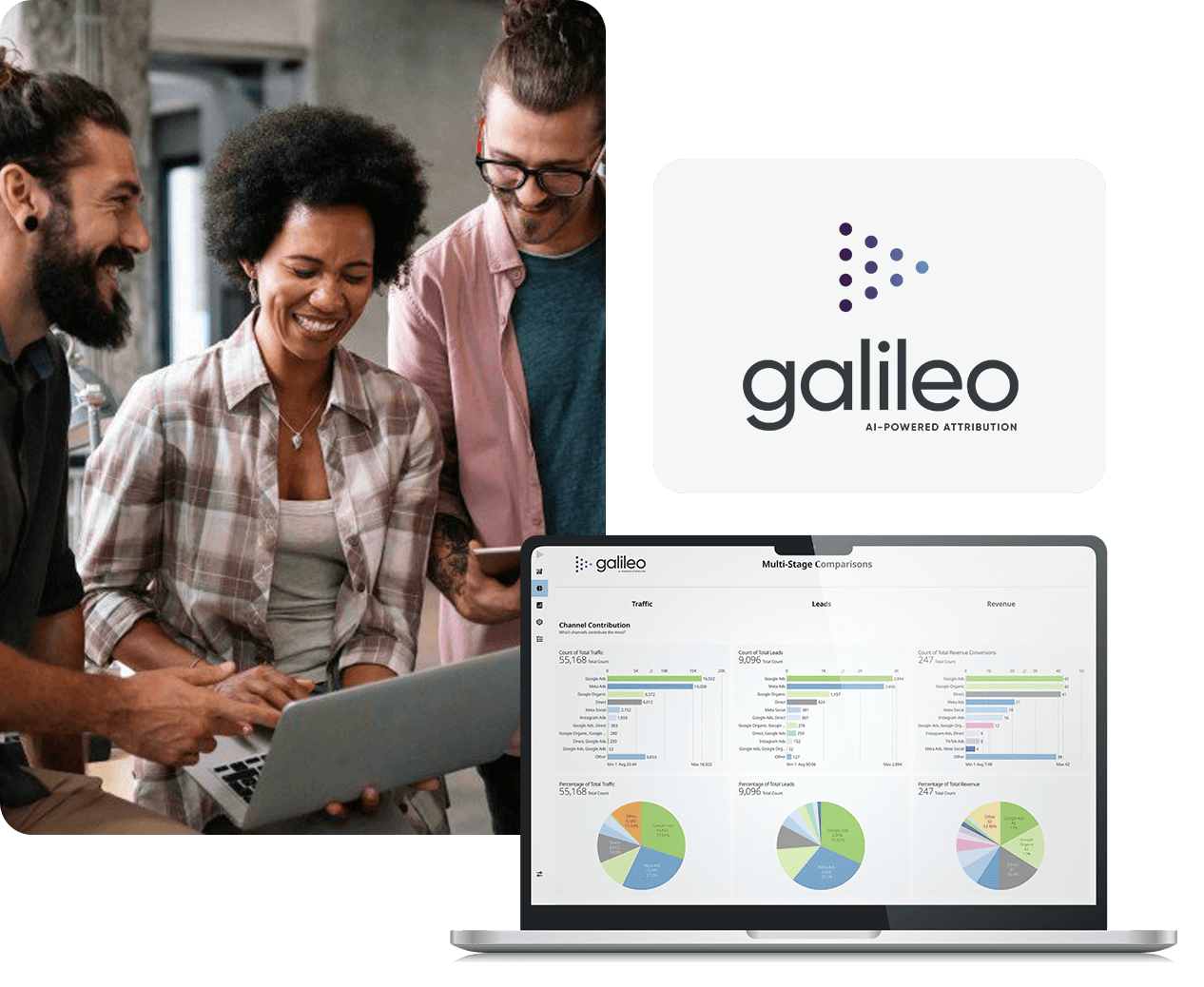

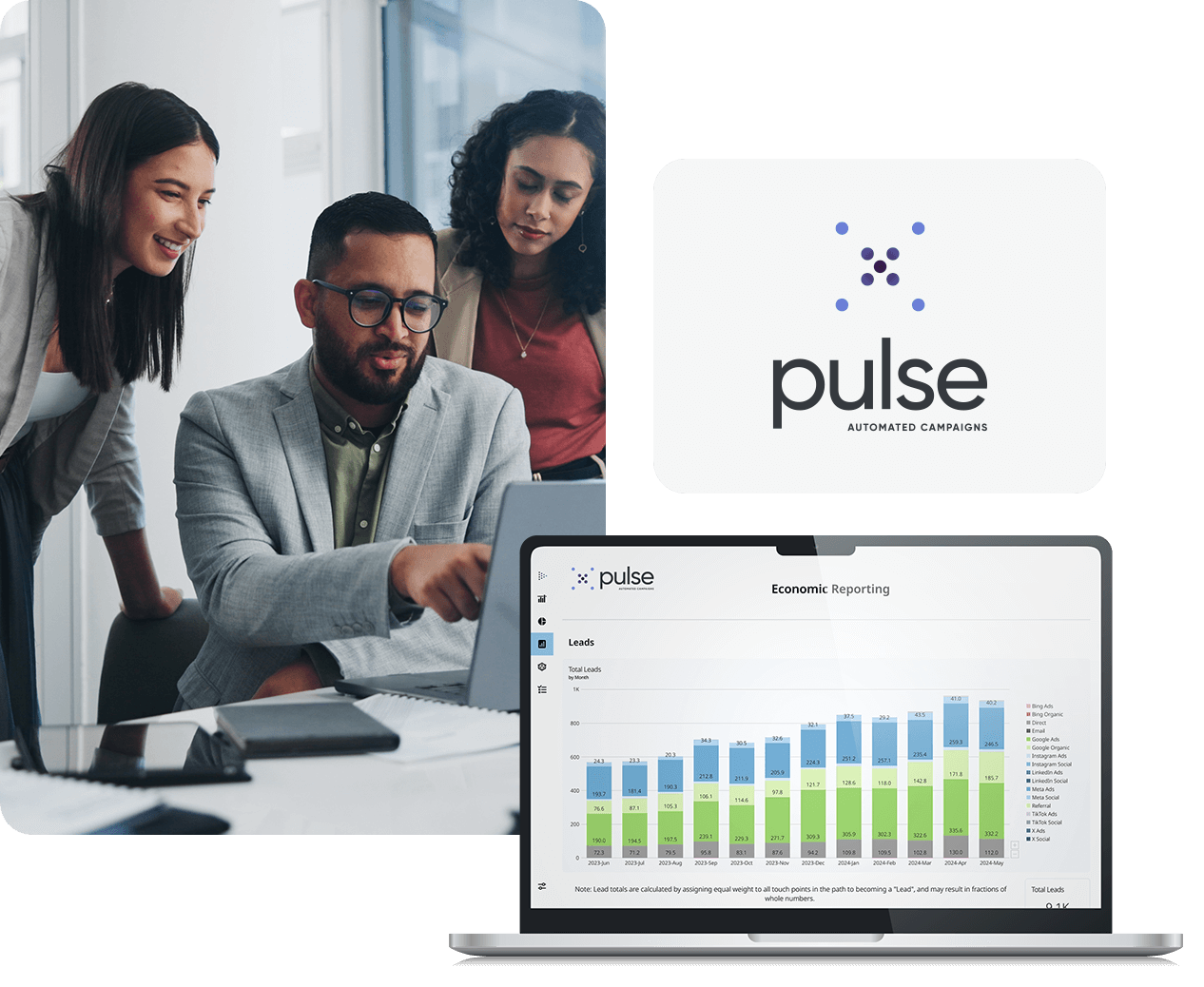

We connect every campaign to revenue using Galileo, so you know exactly what’s working and what’s wasting your budget.

Success is now a science.

As a Google Premier Partner, Arcalea ranks among the top 3% of companies participating in the Google Partners program. This status reflects our proven performance, expert knowledge, and consistent client success across Google Ads. When you work with us, you’re partnering with a team that’s recognized at the highest level for digital excellence.

"Finding a trusted partner is difficult in any space, with digital even more so. Arcalea's experience was completely different and has ultimately been a game-changer for us."

"With Arcalea, it was all about strategy. Today, we're realizing increases in search and online presence, evaluating and understanding audience paths to conversion, and creating new value to our audiences by improving the value we provide online. We couldn't be more excited moving forward."